I. Introduction to CephFS

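The Ceph File System (CephFS) is a POSIX-compliant file system that provides file access to data stored in a Ceph storage cluster. Jewel (10.2.0) was the first Ceph release to include a stable CephFS. CephFS requires at least one Metadata Server (MDS) daemon (ceph-mds) to be running. The MDS daemon manages the metadata of the files stored in CephFS and coordinates access to the Ceph storage cluster.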

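Object storage still costs more than ordinary file storage, because it requires dedicated object storage software and high-capacity disks. If you do not need to store massive amounts of data and only want to share files, plain file storage is the more cost-effective choice.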

II. Mounting directly with a CephFS-type volume

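A cephfs volume lets you mount an existing CephFS volume into your Pod. With emptyDir, the data is erased when the Pod is deleted. With a cephfs volume, the data is preserved and the volume is merely unmounted. A CephFS volume can also be read and written by multiple clients at the same time.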

1. Install the Ceph client

We already had a Ceph cluster before deploying Kubernetes, so we can use it directly. However, every Kubernetes node (in particular the master nodes) still needs the Ceph client installed.

yum install -y ceph-common

- Also place the Ceph configuration file ceph.conf in the /etc/ceph directory on every node.
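The two preparation steps above (installing ceph-common and copying ceph.conf) can be repeated over every node with a small loop. This is a minimal sketch, not part of the original setup. It assumes passwordless root SSH from the machine that holds ceph.conf and yum-based nodes. The function name `prepare_ceph_clients` is hypothetical.

```shell
# Hypothetical helper: prepare_ceph_clients CEPH_CONF NODE...
# Assumes passwordless root SSH to every node and yum-based nodes (e.g. CentOS).
prepare_ceph_clients() {
  src=$1
  shift
  for node in "$@"; do
    # Install the Ceph client tools (ceph CLI, mount.ceph) and make sure /etc/ceph exists
    ssh "root@$node" 'yum install -y ceph-common && mkdir -p /etc/ceph' || return 1
    # Copy the cluster configuration to where the Ceph tools look for it
    scp "$src" "root@$node:/etc/ceph/ceph.conf" || return 1
  done
}
```

For example: `prepare_ceph_clients /etc/ceph/ceph.conf k8s-master1 k8s-node1 k8s-node2`. The worker node names here are placeholders for your own hosts.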

2. Create the Ceph secret

- Note: the key in ceph_secret.yaml must be base64-encoded before it is used in the file.

- Run the following commands on a mon node of the Ceph cluster.

# Method 1:

[cephu@ceph-node1 ~]$ sudo ceph auth get-key client.admin

AQB+dXxfhmh4LRAAM3Ow+ZdP64Py1N5ZvBgKiA==

[cephu@ceph-node1 ~]$ echo -n "AQB+dXxfhmh4LRAAM3Ow+ZdP64Py1N5ZvBgKiA=="| base64

QVFCK2RYeGZobWg0TFJBQU0zT3crWmRQNjRQeTFONVp2QmdLaUE9PQ==

# Method 2:

[cephu@ceph-node1 ~]$ sudo ceph auth get-key client.admin | base64

QVFCK2RYeGZobWg0TFJBQU0zT3crWmRQNjRQeTFONVp2QmdLaUE9PQ==
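Both methods come down to encoding the raw key without a trailing newline, which is why method 1 uses `echo -n`. Below is a hedged sketch of the same step as reusable shell functions. It includes a decode step to double-check the value before pasting it into the Secret. The function names are hypothetical, and `base64 -d` assumes GNU coreutils.

```shell
# Hypothetical helper: encode a raw Ceph key (output of `ceph auth get-key`)
# for a Secret's data.key. printf '%s' avoids encoding a trailing newline,
# and tr joins the output in case base64 wraps long lines.
encode_ceph_key() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Hypothetical helper: decode a Secret data value back to the raw key.
decode_ceph_key() {
  printf '%s' "$1" | base64 -d
}
```

For example, `encode_ceph_key "$(sudo ceph auth get-key client.admin)"` should print the same string as method 2.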

- On the K8S master node, create the Secret manifest ceph_secret.yaml, defined as follows:

# First create a namespace

[root@k8s-master1 ~]# kubectl create ns dev

namespace/dev created

# Then create the Secret manifest

[root@k8s-master1 ~]# vim ceph_secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret
  namespace: dev
type: kubernetes.io/rbd
data:
  key: QVFCK2RYeGZobWg0TFJBQU0zT3crWmRQNjRQeTFONVp2QmdLaUE9PQ==

- Create the ceph_secret resource:

[root@k8s-master1 ~]# kubectl apply -f ceph_secret.yaml

secret/ceph-secret created

3. Create a Pod that mounts the volume

- The manifest is as follows:

[root@k8s-master1 ~]# vim myapp-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: myapp
  namespace: dev
spec:
  containers:
  - name: myapp
    image: busybox
    command: ["sleep", "60000"]
    volumeMounts:
    - mountPath: "/mnt/cephfs"
      name: cephfs            # name of the volume to mount
  volumes:
  - name: cephfs              # volume name
    cephfs:                   # volume type: CephFS
      monitors:
      - 192.168.66.201:6789   # Ceph mon nodes
      - 192.168.66.202:6789
      - 192.168.66.203:6789
      user: admin             # Ceph user to authenticate as
      path: /
      secretRef:
        name: ceph-secret     # Secret holding the Ceph key

- Create the Pod, which mounts the CephFS volume:

[root@k8s-master1 ~]# kubectl apply -f myapp-pod.yaml

pod/myapp created

[root@k8s-master1 ~]# kubectl get po -n dev

NAME    READY   STATUS    RESTARTS   AGE
myapp   1/1     Running   0          94s
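A Running status does not by itself prove that the volume is mounted. One way to check is to read /proc/mounts inside the container. The sketch below assumes the kernel CephFS client is used: its mounts appear with filesystem type `ceph`, while a ceph-fuse mount would not match. The function name is hypothetical.

```shell
# Hypothetical helper: is_cephfs_mounted MOUNT_POINT  (reads /proc/mounts text on stdin)
# Succeeds if a kernel CephFS mount (fstype "ceph") exists at MOUNT_POINT.
is_cephfs_mounted() {
  awk -v mp="$1" '$2 == mp && $3 == "ceph" { found = 1 } END { exit !found }'
}
```

Usage: `kubectl exec -n dev myapp -- cat /proc/mounts | is_cephfs_mounted /mnt/cephfs && echo mounted`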

III. Mounting the volume through a PV and PVC

1. Create the PV

- The manifest is as follows:

[root@k8s-master1 ~]# vim myapp-pv.yaml

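apiVersion: v1
kind: PersistentVolume
metadata:
  name: cephfs-pv
  namespace: dev            # PVs are cluster-scoped, so this field is ignored
spec:
  capacity:
    storage: 1Gi
  accessModes:
  - ReadWriteMany
  cephfs:
    monitors:
    - 192.168.66.201:6789   # Ceph mon node addresses and ports
    - 192.168.66.202:6789
    - 192.168.66.203:6789
    user: admin             # Ceph user to authenticate as
    path: /
    secretRef:
      name: ceph-secret     # name of the Secret created above
    readOnly: false
  persistentVolumeReclaimPolicy: Retain   # in-tree cephfs volumes cannot be deleted automatically; Delete would leave the released PV Failed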

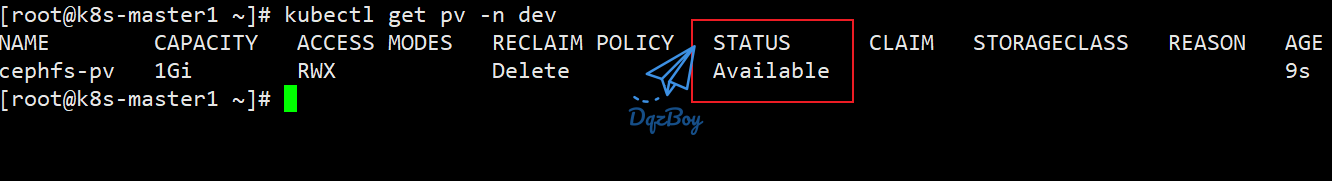

- Create the PV. If its status is Available, the PV was created successfully and can be claimed by a PVC:

[root@k8s-master1 ~]# kubectl apply -f myapp-pv.yaml

persistentvolume "cephfs-pv" created

[root@k8s-master1 ~]# kubectl get pv cephfs-pv -n dev
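Instead of re-running `kubectl get` by hand until the PV shows Available (or, later, until a PVC shows Bound), you can poll for the expected phase. The sketch below is a hypothetical helper built on kubectl's JSONPath output. Recent kubectl releases also offer `kubectl wait --for=jsonpath=...`, which may be preferable where available.

```shell
# Hypothetical helper: wait_for_phase EXPECTED TIMEOUT_SECONDS COMMAND...
# Runs COMMAND once per second until it prints EXPECTED or the timeout expires.
wait_for_phase() {
  expected=$1
  timeout=$2
  shift 2
  i=0
  while [ "$i" -lt "$timeout" ]; do
    phase=$("$@" 2>/dev/null)
    [ "$phase" = "$expected" ] && return 0
    i=$((i + 1))
    sleep 1
  done
  return 1
}
```

Usage: `wait_for_phase Available 30 kubectl get pv cephfs-pv -o jsonpath='{.status.phase}'`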

2. Create the PVC

- The manifest is as follows:

[root@k8s-master1 ~]# vim myapp-pvc.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-pvc
  namespace: dev
spec:
  storageClassName: ""   # bind to the class-less PV above rather than a default StorageClass
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

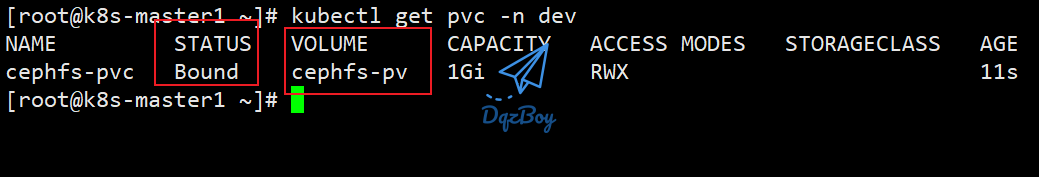

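- **Note:** a PVC binds to a PV only if the two are compatible: the PV must offer the access mode the PVC requests, and its capacity must be at least the requested size.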
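The two conditions in the note can be expressed as a small check. This is a simplified sketch with hypothetical function names, and it models only access modes and capacity. The real PV controller also compares storageClassName, label selectors and volumeMode. The sketch handles only integer quantities, either plain bytes or with Ki/Mi/Gi/Ti suffixes.

```shell
# Hypothetical helper: convert a binary-suffixed integer quantity to bytes.
to_bytes() {
  case $1 in
    *Ti) echo $(( ${1%Ti} * 1024 * 1024 * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *)   echo "$1" ;;
  esac
}

# Hypothetical helper: pv_fits_pvc PV_CAPACITY PV_MODES(comma-separated) PVC_REQUEST PVC_MODE
pv_fits_pvc() {
  case ",$2," in
    *",$4,"*) ;;            # the PV offers the requested access mode
    *) return 1 ;;
  esac
  # the PV must be at least as large as the request
  [ "$(to_bytes "$1")" -ge "$(to_bytes "$3")" ]
}
```

For the manifests above, `pv_fits_pvc 1Gi ReadWriteMany 1Gi ReadWriteMany` succeeds.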

- Create the PVC:

[root@k8s-master1 ~]# kubectl apply -f myapp-pvc.yaml

persistentvolumeclaim/cephfs-pvc created

[root@k8s-master1 ~]# kubectl get pvc cephfs-pvc -n dev

3. Create a Pod that mounts the PVC

- The manifest is as follows:

[root@k8s-master1 ~]# vim myapp-pod-pv.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    app: cephfs-pv-myapp
  name: cephfs-pv-myapp
  namespace: dev
spec:
  containers:
  - name: cephfs-pv-myapp
    image: busybox
    command: ["sleep", "60000"]
    volumeMounts:
    - mountPath: "/mnt/cephfs"   # path inside the container
      name: cephfs-myapp
      readOnly: false
  volumes:
  - name: cephfs-myapp
    persistentVolumeClaim:
      claimName: cephfs-pvc      # name of the PVC to mount

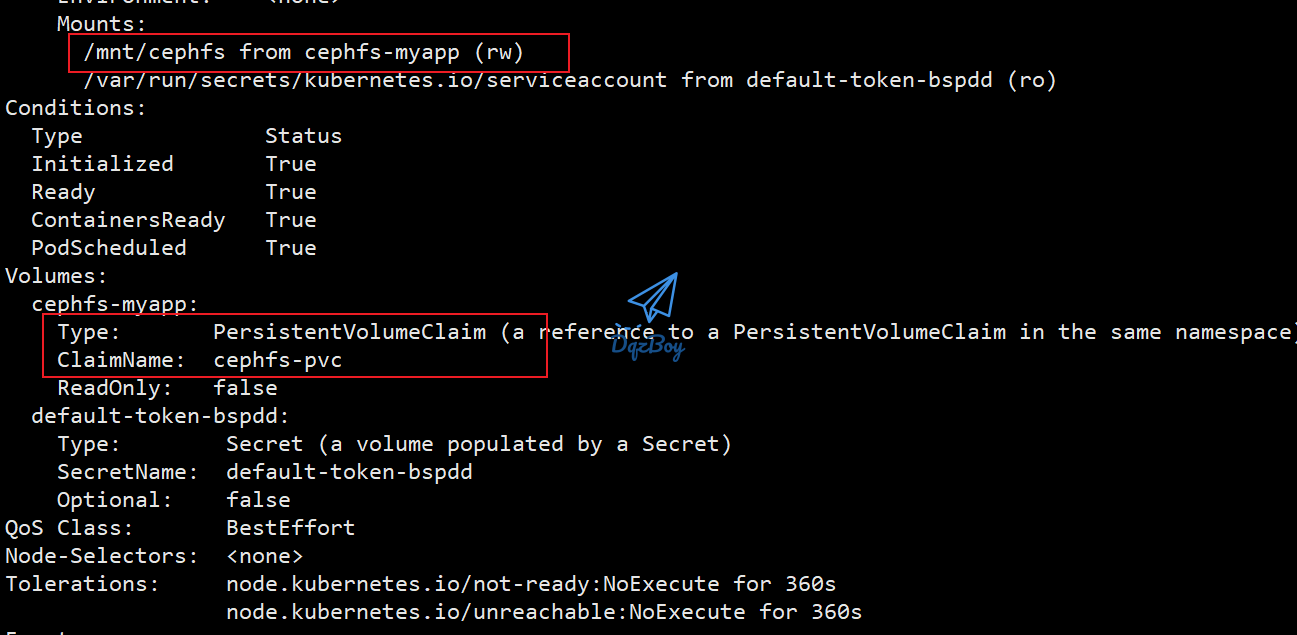

- Create the Pod and check that the CephFS volume is mounted:

[root@k8s-master1 ~]# kubectl apply -f myapp-pod-pv.yaml

pod/cephfs-pv-myapp created

[root@k8s-master1 ~]# kubectl get po cephfs-pv-myapp -n dev

NAME              READY   STATUS    RESTARTS   AGE
cephfs-pv-myapp   1/1     Running   0          25s

[root@k8s-master1 ~]# kubectl describe po cephfs-pv-myapp -n dev

IV. Dynamically provisioning PVs with the CephFS provisioner

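Kubernetes itself does not ship a StorageClass provisioner that dynamically provisions CephFS PVs, as it does for Ceph RBD.

Background: a StorageClass names a provisioner, which decides which volume plugin is used to provision PVs. The community provided cephfs-provisioner to fill this gap. That repository is no longer maintained, but the provisioner can still be used. For details, see https://github.com/kubernetes/org/issues/1563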

- Next, we try out cephfs-provisioner directly:

1. Create the admin-secret resource

# On the ceph-mon node, get the admin user's key, base64-encoded

[cephu@ceph-node1 ~]$ sudo ceph auth get-key client.admin|base64

QVFCK2RYeGZobWg0TFJBQU0zT3crWmRQNjRQeTFONVp2QmdLaUE9PQ==

# On the K8S master node, create the cephfs namespace

[root@k8s-master1 ~]# kubectl create ns cephfs

# On k8s-master1, create a directory to hold all the CephFS manifests

[root@k8s-master1 ~]# mkdir ceph-cephfs

[root@k8s-master1 ~]# cd ceph-cephfs/

# On the K8S master node, create the Secret using the base64-encoded key from Ceph

[root@k8s-master1 ceph-cephfs]# vim admin-secret.yaml

apiVersion: v1
kind: Secret
metadata:
  name: ceph-secret-admin
  namespace: cephfs
type: kubernetes.io/rbd
data:
  key: QVFCK2RYeGZobWg0TFJBQU0zT3crWmRQNjRQeTFONVp2QmdLaUE9PQ==

# Apply the Secret manifest

[root@k8s-master1 ceph-cephfs]# kubectl apply -f admin-secret.yaml

secret/ceph-secret-admin created

# Check the Secret

[root@k8s-master1 ceph-cephfs]# kubectl get secret -n cephfs

NAME                TYPE                DATA   AGE
ceph-secret-admin   kubernetes.io/rbd   1      82s

2. Deploy the CephFS provisioner

- Create the CephFS provisioner manifest:

[root@k8s-master1 ceph-cephfs]# vim storage-cephfs-provisioner.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: cephfs-provisioner
  namespace: cephfs
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-provisioner
rules:
- apiGroups: [""]
  resources: ["persistentvolumes"]
  verbs: ["get", "list", "watch", "create", "delete"]
- apiGroups: [""]
  resources: ["persistentvolumeclaims"]
  verbs: ["get", "list", "watch", "update"]
- apiGroups: ["storage.k8s.io"]
  resources: ["storageclasses"]
  verbs: ["get", "list", "watch"]
- apiGroups: [""]
  resources: ["events"]
  verbs: ["create", "update", "patch"]
- apiGroups: [""]
  resources: ["endpoints"]
  verbs: ["get", "list", "watch", "create", "update", "patch"]
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create", "get", "delete"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: cephfs-provisioner
subjects:
- kind: ServiceAccount
  name: cephfs-provisioner
  namespace: cephfs
roleRef:
  kind: ClusterRole
  name: cephfs-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: cephfs-provisioner
  namespace: cephfs
rules:
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create", "get", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: cephfs-provisioner
  namespace: cephfs
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: cephfs-provisioner
subjects:
- kind: ServiceAccount
  name: cephfs-provisioner
  namespace: cephfs
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: cephfs-provisioner
  namespace: cephfs
spec:
  selector:
    matchLabels:
      app: cephfs-provisioner
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      labels:
        app: cephfs-provisioner
    spec:
      containers:
      - name: cephfs-provisioner
        image: "registry.cn-chengdu.aliyuncs.com/ives/cephfs-provisioner:latest"
        env:
        - name: PROVISIONER_NAME
          value: ceph.com/cephfs   # must match the StorageClass "provisioner" field
        command:
        - "/usr/local/bin/cephfs-provisioner"
        args:
        - "-id=cephfs-provisioner-1"
      serviceAccountName: cephfs-provisioner   # "serviceAccount" is the deprecated alias

- Apply the manifest:

[root@k8s-master1 ceph-cephfs]# kubectl apply -f storage-cephfs-provisioner.yaml

serviceaccount/cephfs-provisioner created

clusterrole.rbac.authorization.k8s.io/cephfs-provisioner created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-provisioner created

role.rbac.authorization.k8s.io/cephfs-provisioner created

rolebinding.rbac.authorization.k8s.io/cephfs-provisioner created

deployment.apps/cephfs-provisioner created

- Check the status of the resources:

[root@k8s-master1 ceph-cephfs]# kubectl get pods -n cephfs |grep cephfs

cephfs-provisioner-6d76ff6bd5-pcz5m 1/1 Running 0 103s

3. Create a StorageClass

[root@k8s-master1 ceph-cephfs]# vim storageclass-cephfs.yaml

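kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: cephfs                   # note: PVCs created later must reference this name
  namespace: cephfs              # StorageClasses are cluster-scoped, so this field is ignored
provisioner: ceph.com/cephfs     # use the external cephfs provisioner (matches its PROVISIONER_NAME)
parameters:
  monitors: 192.168.66.201:6789,192.168.66.202:6789,192.168.66.203:6789   # Ceph mon node addresses and ports
  adminId: admin                 # Ceph user to authenticate as
  adminSecretName: ceph-secret-admin   # name of the Secret holding the key
  adminSecretNamespace: "cephfs"       # namespace of that Secret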

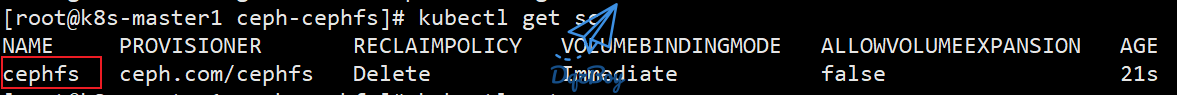
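The StorageClass takes the monitors as one comma-separated string, whereas the inline volume and the PV earlier list them one per line. Below is a trivial helper (hypothetical name) that turns the list form into the string form.

```shell
# Hypothetical helper: join_monitors ADDR...
# Prints the addresses joined with commas, as the StorageClass "monitors" parameter expects.
join_monitors() {
  old_ifs=$IFS
  IFS=,
  printf '%s\n' "$*"   # "$*" joins the arguments with the first character of IFS
  IFS=$old_ifs
}
```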

- Create the cephfs StorageClass and check that it was created successfully:

[root@k8s-master1 ceph-cephfs]# kubectl apply -f storageclass-cephfs.yaml

storageclass.storage.k8s.io/cephfs created

[root@k8s-master1 ceph-cephfs]# kubectl get sc
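The StorageClass `provisioner` value must be identical to the `PROVISIONER_NAME` environment variable of the provisioner Deployment (`ceph.com/cephfs` in both manifests above). Otherwise the provisioner ignores claims for this class, and they stay Pending. The sketch below compares the two values straight from the cluster; the function name is hypothetical.

```shell
# Hypothetical helper: sc_matches_provisioner STORAGECLASS DEPLOYMENT NAMESPACE
# Succeeds when the StorageClass provisioner equals the Deployment's PROVISIONER_NAME.
sc_matches_provisioner() {
  sc_prov=$(kubectl get storageclass "$1" -o jsonpath='{.provisioner}') || return 1
  dep_prov=$(kubectl get deployment "$2" -n "$3" \
    -o jsonpath='{.spec.template.spec.containers[0].env[?(@.name=="PROVISIONER_NAME")].value}') || return 1
  [ -n "$sc_prov" ] && [ "$sc_prov" = "$dep_prov" ]
}
```

Usage: `sc_matches_provisioner cephfs cephfs-provisioner cephfs && echo "provisioner names match"`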

4. Create a PVC and test dynamic PV provisioning

[root@k8s-master1 ceph-cephfs]# vim cephfs-pvc-test.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-pvc-test
  namespace: cephfs
  annotations:
    # Note: the StorageClass name; the one created above is "cephfs".
    # (spec.storageClassName is the non-deprecated equivalent of this annotation)
    volume.beta.kubernetes.io/storage-class: "cephfs"
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 10Gi

- 检查

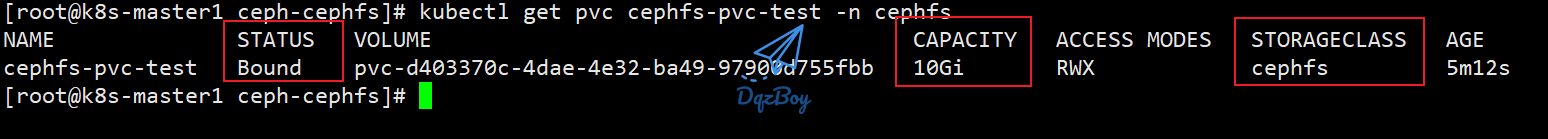

ceph-fs-test PVC是否成功的被cephfs storageClass分配PV。如果PVC的状态是Bound则代表绑定成功,如果状态是Pending或Failed则表示绑定PVC失败,请describe或者查看日志来确定问题出错在哪。

[root@k8s-master1 ceph-cephfs]# kubectl apply -f cephfs-pvc-test.yaml

persistentvolumeclaim/cephfs-pvc-test created

[root@k8s-master1 ceph-cephfs]# kubectl get pvc cephfs-pvc-test -n cephfs
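To see which PV the provisioner created for the claim, you can extract the VOLUME column once the status is Bound. Below is a hypothetical helper that parses the `kubectl get pvc` table. `kubectl get pvc cephfs-pvc-test -n cephfs -o jsonpath='{.spec.volumeName}'` returns the same name more robustly.

```shell
# Hypothetical helper: bound_volume CLAIM_NAME  (reads `kubectl get pvc` output on stdin)
# Prints the VOLUME column and succeeds only if the claim's STATUS is Bound.
bound_volume() {
  awk -v name="$1" '
    NR > 1 && $1 == name && $2 == "Bound" { print $3; ok = 1 }
    END { exit !ok }'
}
```

Usage: `kubectl get pvc cephfs-pvc-test -n cephfs | bound_volume cephfs-pvc-test`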

- As the figure above shows, the PVC is bound: the cephfs StorageClass successfully provisioned a matching PV for it.

5. Create a Pod that uses the PVC

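- Verify that CephFS is mounted into the Pod successfully.

- **Note:** the PVC and the Pod must be in the same namespace; a Pod can only use a PVC from its own namespace.

- **Note:** if the PVC is deleted, the data on its volume is deleted as well. A dynamically provisioned PV takes its reclaim policy from the StorageClass, which defaults to Delete.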
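If the data should outlive the claim, switch the reclaim policy of the provisioned PV to Retain before deleting the PVC. The PV then moves to Released instead of being deleted. Below is a minimal sketch (hypothetical function name) that wraps the `kubectl patch` call. Setting `reclaimPolicy: Retain` on the StorageClass should apply the same policy to future PVs from that class.

```shell
# Hypothetical helper: retain_pv PV_NAME
# Sets persistentVolumeReclaimPolicy to Retain on an existing PV.
retain_pv() {
  kubectl patch pv "$1" -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
}
```

Usage: `retain_pv "$(kubectl get pvc cephfs-pvc-test -n cephfs -o jsonpath='{.spec.volumeName}')"`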

[root@k8s-master1 ceph-cephfs]# vim cephfs-pvc-pod.yaml

kind: Pod
apiVersion: v1
metadata:
  labels:
    app: cephfs-pvc-pod
  name: cephfs-pv-pod
  namespace: cephfs
spec:
  containers:
  - name: cephfs-pv-busybox
    image: busybox
    command: ["sleep", "60000"]
    volumeMounts:
    - mountPath: "/mnt/cephfs"
      name: cephfs-vol
      readOnly: false
  volumes:
  - name: cephfs-vol
    persistentVolumeClaim:
      claimName: cephfs-pvc-test   # name of the PVC the Pod binds to

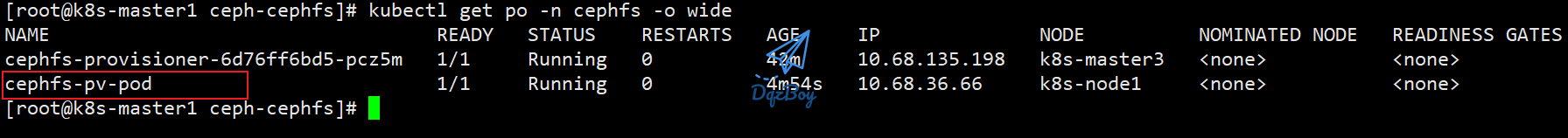

- Create the Pod and check that the CephFS volume is mounted:

[root@k8s-master1 ceph-cephfs]# kubectl apply -f cephfs-pvc-pod.yaml

pod/cephfs-pv-pod created

[root@k8s-master1 ceph-cephfs]# kubectl get po -n cephfs

NAME            READY   STATUS    RESTARTS   AGE
cephfs-pv-pod   1/1     Running   0          43s

[root@k8s-master1 ceph-cephfs]# kubectl get po -n cephfs -o wide
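The introduction says a CephFS volume can be read and written by several clients at once. To check this, you need a second Pod that mounts the same ReadWriteMany PVC, for example a copy of the manifest above under another name (this second Pod is an assumption, not part of the original walk-through). The check then writes through one Pod and reads through the other. The helper name is hypothetical.

```shell
# Hypothetical helper: rwx_check NAMESPACE WRITER_POD READER_POD FILE
# Writes a marker through one Pod and succeeds only if another Pod reads it back.
rwx_check() {
  marker="rwx-test-$$"
  kubectl exec -n "$1" "$2" -- sh -c "echo $marker > $4" || return 1
  [ "$(kubectl exec -n "$1" "$3" -- cat "$4")" = "$marker" ]
}
```

Usage: `rwx_check cephfs cephfs-pv-pod cephfs-pv-pod2 /mnt/cephfs/rwx-test.txt`, where `cephfs-pv-pod2` is the hypothetical second Pod.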

6. Create an Nginx container

6.1: Create the Pod and PVC

- Create the manifests:

# Create a namespace named webapp

[root@k8s-master1 ceph-cephfs]# kubectl create ns webapp

# The PVC and the Pod both belong to the webapp namespace

# Create the PVC

[root@k8s-master1 ceph-cephfs]# vim cephfs-pvc-nginx.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-pvc-nginx
  namespace: webapp
  annotations:
    volume.beta.kubernetes.io/storage-class: "cephfs"   # name of the StorageClass to use
spec:
  accessModes:
  - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

# Create the Deployment that runs the Pod

[root@k8s-master1 ceph-cephfs]# vim cephfs-deploy-nginx.yaml

kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: nginx-web
  name: nginx-web
  namespace: webapp
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: nginx-web
  template:
    metadata:
      labels:
        k8s-app: nginx-web
      namespace: webapp
      name: nginx-web
    spec:
      containers:
      - name: nginx-web
        image: nginx
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 80
          name: web
          protocol: TCP
        volumeMounts:
        - name: cephfs
          mountPath: /usr/share/nginx/html   # mount path inside the container
      volumes:
      - name: cephfs
        persistentVolumeClaim:
          claimName: cephfs-pvc-nginx        # name of the PVC the Pod binds to

- Apply the manifests:

[root@k8s-master1 ceph-cephfs]# kubectl apply -f cephfs-pvc-nginx.yaml

persistentvolumeclaim/cephfs-pvc-nginx created

[root@k8s-master1 ceph-cephfs]# kubectl apply -f cephfs-deploy-nginx.yaml

deployment.apps/nginx-web created

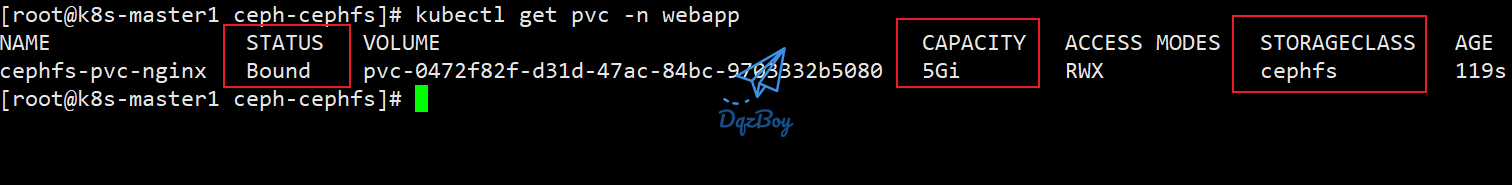

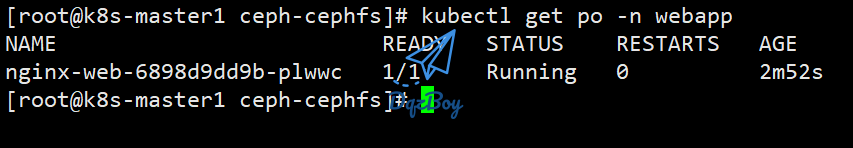

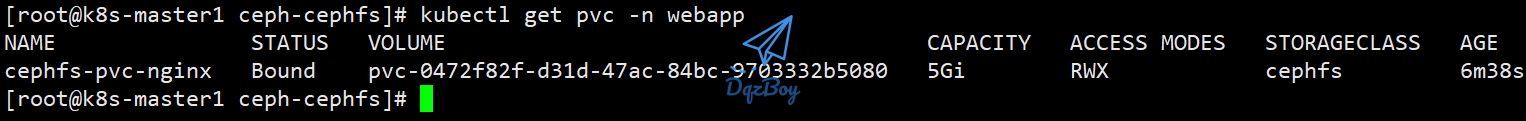

- Check the PVC binding and the Pod status:

[root@k8s-master1 ceph-cephfs]# kubectl get pvc -n webapp

[root@k8s-master1 ceph-cephfs]# kubectl get po -n webapp

6.2: Modify the page content

[root@k8s-master1 ~]# kubectl get po -n webapp

NAME                         READY   STATUS    RESTARTS   AGE
nginx-web-6898d9dd9b-plwwc   1/1     Running   0          3m57s

[root@k8s-master1 ~]# kubectl exec -it nginx-web-6898d9dd9b-plwwc -n webapp -- /bin/bash -c 'echo hello cephfs-nginx > /usr/share/nginx/html/index.html'

6.3: Access the Nginx Pod

[root@k8s-master1 ~]# kubectl get po -o wide -n webapp | grep nginx-web-6898d9dd9b-plwwc | awk '{print $6}'

[root@k8s-master1 ~]# curl 10.68.159.135

hello cephfs-nginx
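The Deployment gives the Pod a new name, and possibly a new IP, every time the Pod is recreated. Looking the Pod up by its label therefore avoids copying names into the grep/awk pipeline above. Below is a hedged sketch (hypothetical function name) that fetches the page and compares it with the expected text. Like the `curl` above, it must run on a host that can reach the Pod network.

```shell
# Hypothetical helper: check_page NAMESPACE LABEL_SELECTOR EXPECTED_BODY
# Finds the first Pod matching the selector, fetches http://<pod-ip>/ and
# succeeds only if the response body equals EXPECTED_BODY.
check_page() {
  ip=$(kubectl get pods -n "$1" -l "$2" -o jsonpath='{.items[0].status.podIP}') || return 1
  [ -n "$ip" ] || return 1
  body=$(curl -s "http://$ip/") || return 1
  [ "$body" = "$3" ]
}
```

Usage: `check_page webapp k8s-app=nginx-web "hello cephfs-nginx" && echo ok`. Running the same command again after the Pod is recreated shows whether the data survived.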

6.4: Delete the Pod and recreate it

[root@k8s-master1 ~]# cd ceph-cephfs/

[root@k8s-master1 ceph-cephfs]# kubectl delete -f cephfs-deploy-nginx.yaml

deployment.apps "nginx-web" deleted

[root@k8s-master1 ceph-cephfs]# kubectl get po -n webapp

No resources found in webapp namespace.

[root@k8s-master1 ceph-cephfs]# kubectl get pvc -n webapp

- Create the Pod again, mounting the same PVC, and access it to check that the data is still there:

[root@k8s-master1 ceph-cephfs]# kubectl apply -f cephfs-deploy-nginx.yaml

deployment.apps/nginx-web created

[root@k8s-master1 ceph-cephfs]# kubectl get po -n webapp

NAME                         READY   STATUS    RESTARTS   AGE
nginx-web-6898d9dd9b-lhhjf   1/1     Running   0          16s

[root@k8s-master1 ceph-cephfs]# kubectl get po -o wide -n webapp | grep nginx-web-6898d9dd9b-lhhjf | awk '{print $6}'

[root@k8s-master1 ceph-cephfs]# curl 10.68.159.136

hello cephfs-nginx